U.K. Research Funder is Latest AI Watchdog With Launch of a New Center

A major U.K. foundation has become a leading supporter of research on the ethics and risks of advanced artificial intelligence, joining some prominent Silicon Valley donors in championing the cause.

When Stephen Hawking spoke recently at the opening of the Leverhulme Centre for the Future of Intelligence, he offered a message of both hope and caution about the development of advanced AI.

“In short, the rise of powerful AI will be either the best or the worst thing to ever happen to humanity. We do not yet know which.”

That may sound dramatic, but it’s actually a little less dark than his warning in 2014, when he famously said, “AI could spell the end of the human race.” Just in the past couple of years, researchers and funders have begun to take such concerns more seriously, and now a second philanthropy-backed academic research center has launched to wrestle with the issue.

The Leverhulme Centre opened this month at Cambridge University, where Hawking is also a professor, and brings together some of the top minds in AI research from Cambridge, Oxford, Imperial College London, and UC Berkeley. It is funded by a £10 million ($12 million) grant from the Leverhulme Trust, a prominent British research funder that has embraced AI as a major issue.

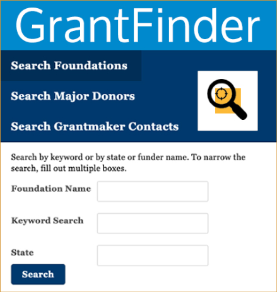

Leverhulme has been around since 1925 and gives the equivalent of close to $100 million annually through grants and scholarships for research and education. The trust was built on the wealth of the founder of Lever Brothers, originally a soap manufacturer that would become part of the massive conglomerate Unilever. The funder is unusual in that it doesn't set explicit research priorities or strategies, leaving the choice of topic up to applicants. It also offers many relatively accessible points of entry for those applicants.

This is the second big piece of news on the AI watchdog front in just the last few months. The first came when the Open Philanthropy Project, anchored by the wealth of Dustin Moskovitz and Cari Tuna, put $5.5 million toward the creation of the Center for Human-Compatible Artificial Intelligence at Berkeley. Stuart Russell of Berkeley heads that center and will also contribute to research at the new Cambridge institute. There's further overlap: the Leverhulme Trust was also a funder of the Berkeley center.

The threats may sound fantastical, but given the rapid advances in artificial intelligence, which will presumably continue in the coming decades, the resulting technologies could drastically reshape the world. Hawking cites AI's potential for reversing environmental damage and curing disease, but also for driving powerful autonomous weapons and further concentrating power.

One of the big concerns is making sure that highly intelligent computer programs share the best interests and values of humanity. A common hypothetical illustrates the problem: a robot asked to make some paperclips turns the planet into a junkyard of paperclips, because making paperclips is its only concern.

The Leverhulme Centre kicks off with nine projects, including one on this problem of value alignment, as well as others on topics like autonomous weapons, policymaking, and transparency.

One notable component of the endeavor is that the center is not just talking to AI experts. Its academic director and executive director are both philosophers by training, and the projects draw on specialists in law, politics and psychology.

We've noted before that AI is a ripe area for philanthropy: it's a budding field that's still highly unpredictable, and one that might seem a little out there for public funding sources. But its interdisciplinary nature is another big reason private funders should get involved, since such work can also be a tough sell.

Corporations like car companies and tech giants are rushing to win the race for better AI, pouring R&D funds into the work. But I’m skeptical that they’ll be consulting with teams of philosophers and ethicists to make sure their work is beneficial beyond the bottom line. Hopefully, efforts like this one can set some rigorous ground rules.
